Definition of Racism in AI

Racism in AI, also known as algorithmic or automated racism, refers to racial bias or discrimination that manifests in AI ecosystems, algorithms, or machine learning models. It is a critical ethical and societal concern: AI tools and technologies, whether intentionally or unintentionally, can produce biased outcomes that disproportionately harm or favor individuals from certain racial or ethnic backgrounds.

Types of Bias in AI

Implicit Bias: Implicit bias in AI refers to the unconscious preferences and stereotypes that can be embedded in algorithms due to biased training data or human input. It can manifest in the form of favoritism or discrimination against certain racial or ethnic groups, perpetuating historical inequalities.

Systemic Bias: Systemic bias in AI occurs when structural inequalities within the AI development process lead to biased outcomes. This bias can result from biased data collection methods, skewed representation in development teams, or discriminatory practices during algorithm design.

Examples of Racial Bias in AI

Racial bias in AI has been observed in various contexts, including:

Facial Recognition: Some AI systems misidentify or disproportionately misclassify individuals from certain racial or ethnic backgrounds, leading to privacy and security concerns.

Sentiment Analysis: Some sentiment analysis tools exhibit racial bias by associating negative sentiment with specific racial groups, perpetuating harmful stereotypes.

Healthcare: Some AI algorithms provide different recommendations or diagnoses based on race, potentially leading to unequal healthcare outcomes.

The Impact of Bias in AI

Bias in AI is a complex problem with consequences for society and human rights. It arises when AI systems learn from skewed or unrepresentative data and then produce unfair outcomes. This unfairness can affect many areas of life, from employment and healthcare to the justice system and progress toward sustainable development goals. Key impacts include:

Unintended Discrimination: Bias in AI can lead to unfair and discriminatory outcomes, particularly against historically marginalized groups. For example, biased algorithms in hiring processes might discriminate against job candidates based on gender, race, or other protected characteristics.

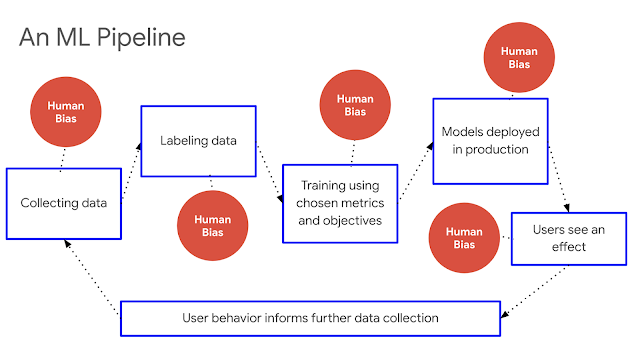

Reinforcement of Existing Biases: AI systems learn from historical data, and if that data is biased, the AI can perpetuate and even amplify those biases. This can lead to a feedback loop, where biased decisions made by AI reinforce existing societal inequalities.

Legal and Ethical Concerns: Discriminatory AI systems may violate anti-discrimination laws and raise questions about responsibility and liability when biased decisions harm individuals.

Criminal Justice: Bias in AI can impact criminal justice systems, leading to unfair sentencing, profiling, or policing practices. Predictive policing algorithms, for example, may target specific communities disproportionately, exacerbating distrust between law enforcement and marginalized communities.

Source: AlgorithmWatch.org, Google apologizes after its Vision AI produced racist results

Strategies for Training AI Against Racism

Training AI systems to be fair, unbiased, and respectful of racial diversity is a complex but crucial endeavor. Here are actionable strategies for reducing racial bias in AI technology:

Identify the Bias in Datasets

Bias in AI often starts with biased data. Datasets used to train AI models may inadvertently reflect societal bias, which can stem from historical discrimination, cultural stereotypes, or systemic inequalities. Identifying bias in datasets is therefore a crucial first step in addressing AI bias. AI developers and data scientists can start with these steps:

Ensure that the data faithfully represents the various traits and attributes of the population it intends to cater to.

Deliberately identify underrepresented racial and ethnic groups in your dataset and actively seek out data sources that reflect their diversity. Collaborate with community organizations or experts to acquire comprehensive data.
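Finding underrepresented groups can begin with a simple comparison between each group's share of the dataset and its share of the population the system is meant to serve. The sketch below assumes group labels are already available for auditing; the group names, the reference shares, and the 0.8 tolerance are hypothetical placeholders (in practice, reference shares might come from census or service-area data).

```python
from collections import Counter


def representation_gaps(group_labels, reference_shares, tolerance=0.8):
    """Compare each group's share of a dataset with its share of a reference
    population, flagging groups whose ratio falls below `tolerance`."""
    if not group_labels:
        raise ValueError("group_labels is empty")
    counts = Counter(group_labels)
    total = len(group_labels)
    report = {}
    for group, ref_share in reference_shares.items():
        data_share = counts.get(group, 0) / total
        # 1.0 means the group appears in proportion to the reference population
        ratio = data_share / ref_share
        report[group] = {
            "data_share": round(data_share, 3),
            "reference_share": ref_share,
            "ratio": round(ratio, 3),
            "underrepresented": ratio < tolerance,
        }
    return report


# Hypothetical labels and reference shares, for illustration only
labels = ["A"] * 70 + ["B"] * 20 + ["C"] * 10
report = representation_gaps(labels, {"A": 0.6, "B": 0.2, "C": 0.2})
for group, row in report.items():
    print(group, row)
```

With these toy numbers only group C, which appears at half its reference share, is flagged. A ratio check like this only reveals how many records each group has; it says nothing about whether those records are accurate or free of stereotyped labels.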

Collect Diverse and Representative Data

Collecting diverse and representative data is a proactive and actionable step that organizations can take to combat racial bias and promote fairness in AI systems. Below is a practical breakdown of how to achieve this:

#1) Define Inclusivity Goals

Begin by setting clear goals for inclusivity in your dataset. Determine the racial, ethnic, and cultural groups that should be adequately represented based on the contexts in which your AI application will operate. Identify any potential underrepresented groups that need special attention.

#2) Broaden Data Sources

To ensure real-world diversity, expand your data sources. Engage with a variety of channels, platforms, and data providers to access a wide range of perspectives. This might include publicly available data, partnerships with organizations, or user-generated content.

#3) Community Involvement

Collaborate with community organizations and advocacy groups representing different racial and ethnic backgrounds. Seek their guidance on data collection strategies and ethical considerations. Involving these communities ensures a more accurate reflection of real-world diversity.

#4) Synthetic Data Generation

In cases where it's challenging to access diverse real-world data, consider synthetic data generation techniques. This involves creating artificial data that simulates the characteristics of underrepresented groups while maintaining privacy and anonymity.
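One common family of techniques creates synthetic rows by interpolating between real rows from the underrepresented group. The sketch below is a simplified SMOTE-style variant, assuming purely numeric features: standard SMOTE interpolates toward a row's k nearest neighbors, whereas this version picks pairs at random. The function name and sample rows are hypothetical. Interpolated points sit close to real records, so this step alone does not guarantee the privacy and anonymity mentioned above.

```python
import random


def interpolate_synthetic(samples, n_new, seed=0):
    """Create n_new synthetic rows by interpolating between randomly chosen
    pairs of real rows from one underrepresented group.

    Simplified SMOTE-style sketch: standard SMOTE interpolates toward one of
    each row's k nearest neighbors, whereas this picks pairs at random."""
    if len(samples) < 2:
        raise ValueError("need at least two real rows to interpolate between")
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        a, b = rng.sample(samples, 2)
        t = rng.random()  # position along the line segment from a to b
        synthetic.append([x + t * (y - x) for x, y in zip(a, b)])
    return synthetic


# Hypothetical numeric feature rows for an underrepresented group
minority_rows = [[1.0, 2.0], [3.0, 4.0], [2.0, 5.0]]
for row in interpolate_synthetic(minority_rows, n_new=3):
    print(row)
```

Because every synthetic row is a convex combination of two real rows, it stays within the range of the observed data; it cannot represent variation the real sample never captured.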

#5) Cross-Cultural Validation

If your AI system is intended for a global audience, validate your dataset across different cultural contexts. What may be considered acceptable or biased in one culture could differ in another, and this validation helps prevent cultural insensitivity.

Algorithmic Fairness

Fairness-aware machine learning refers to the practice of modifying machine learning algorithms so that they do not discriminate against individuals based on race, ethnicity, gender, or other sensitive attributes. The goal is to produce models that provide equitable treatment and opportunities for all individuals, regardless of their background.

#1) Define Fairness Metrics

Before implementing fairness-aware techniques, it's essential to define fairness metrics that align with diversity, equity, and inclusion principles. Common fairness metrics include disparate impact, equal opportunity, and demographic parity. Choose metrics that are relevant to your specific use case.
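The three metrics named above can be computed from predictions and group labels alone. The sketch below uses common definitions, assuming binary predictions where 1 is the favorable outcome: demographic parity compares selection rates, disparate impact takes their ratio, and equal opportunity compares true positive rates. The function name and the "P"/"U" group labels are hypothetical.

```python
def _mean(values):
    return sum(values) / len(values) if values else float("nan")


def fairness_metrics(y_true, y_pred, groups, privileged, unprivileged):
    """Group-fairness metrics for binary predictions (1 = favorable outcome).

    Differences are unprivileged minus privileged, so 0 means parity and
    negative values mean the unprivileged group fares worse."""
    rows = list(zip(y_true, y_pred, groups))

    def selection_rate(g):
        # Share of the group that receives the favorable prediction
        return _mean([p for _, p, grp in rows if grp == g])

    def true_positive_rate(g):
        # Share of truly qualified members (y_true == 1) predicted favorably
        return _mean([p for t, p, grp in rows if grp == g and t == 1])

    sr_priv, sr_unpriv = selection_rate(privileged), selection_rate(unprivileged)
    tpr_priv, tpr_unpriv = true_positive_rate(privileged), true_positive_rate(unprivileged)
    return {
        "demographic_parity_difference": sr_unpriv - sr_priv,
        "disparate_impact_ratio": sr_unpriv / sr_priv if sr_priv else float("nan"),
        "equal_opportunity_difference": tpr_unpriv - tpr_priv,
    }


# Toy data: "P" (privileged) and "U" (unprivileged) are placeholder labels
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["P"] * 4 + ["U"] * 4
print(fairness_metrics(y_true, y_pred, groups, privileged="P", unprivileged="U"))
```

In this toy data the privileged group is selected at 0.75 and the unprivileged group at 0.25, giving a disparate impact ratio of one third. The metrics can disagree with one another, which is why the choice should follow from the use case.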

#2) Data Preprocessing

Clean and preprocess your dataset to reduce bias as much as possible at the data level. This may involve oversampling underrepresented groups, undersampling overrepresented groups, or creating synthetic data to balance the dataset.
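Random oversampling is the simplest of the balancing options listed above: rows from smaller groups are duplicated, drawn with replacement, until every group matches the largest. This is a minimal sketch assuming rows are dictionaries with a group field; the function and field names are hypothetical.

```python
import random
from collections import defaultdict


def oversample_groups(rows, group_key, seed=0):
    """Duplicate rows from smaller groups (sampling with replacement) until
    every group has as many rows as the largest group."""
    rng = random.Random(seed)
    by_group = defaultdict(list)
    for row in rows:
        by_group[row[group_key]].append(row)
    target = max(len(members) for members in by_group.values())
    balanced = []
    for members in by_group.values():
        balanced.extend(members)
        # Draw extra copies to make up this group's shortfall
        balanced.extend(rng.choices(members, k=target - len(members)))
    return balanced


# Hypothetical rows: group A has 6 records, group B has 2
rows = [{"group": "A", "x": i} for i in range(6)] + [{"group": "B", "x": i} for i in range(2)]
balanced = oversample_groups(rows, "group")
print(sum(1 for r in balanced if r["group"] == "B"))
```

Because oversampling copies existing rows, it should be applied only to the training portion after splitting the data; otherwise duplicates can leak into the evaluation set and inflate scores.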

#3) Feature Engineering

Carefully select and engineer features that are relevant to your fairness goals. This may involve creating fairness-related features or redefining existing ones to reduce bias, for example by scrutinizing features such as ZIP code that can act as proxies for race.
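Features that act as proxies for race can be screened by measuring how strongly each one correlates with group membership. The sketch below uses Pearson correlation against a 0/1 group indicator, assuming numeric features and group labels available for auditing; the 0.5 threshold and feature names are hypothetical. It only catches single-feature, linear relationships, and combinations of features can still encode race.

```python
import math


def pearson(x, y):
    """Pearson correlation; returns 0.0 if either input is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy) if sx and sy else 0.0


def flag_proxy_features(features, group_indicator, threshold=0.5):
    """Return features whose absolute correlation with the 0/1 group
    indicator meets or exceeds `threshold`."""
    scores = {name: pearson(values, group_indicator) for name, values in features.items()}
    return {name: round(r, 3) for name, r in scores.items() if abs(r) >= threshold}


# Hypothetical features for six records; group_indicator marks group membership
group_indicator = [1, 1, 1, 0, 0, 0]
features = {
    "neighborhood_score": [5, 6, 5, 1, 2, 1],  # tracks group closely
    "weekday_of_visit": [1, 0, 1, 0, 1, 0],    # weakly related
}
print(flag_proxy_features(features, group_indicator))
```

A flagged feature is a prompt for review rather than an automatic removal: dropping it may not remove the bias, and in some settings it may be legitimately predictive.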

#4) Algorithm Selection

Choose machine learning algorithms that are amenable to fairness-aware techniques. Certain algorithms, such as decision trees or logistic regression, can be more interpretable and easier to modify for fairness.

#5) Model Modification

Modify the selected machine learning model to incorporate fairness constraints. This can be achieved by adjusting the model's objective function to include fairness penalties. For example, you may penalize the model for making predictions that disproportionately favor one group over another.
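One way to add a fairness penalty to the objective is sketched below: logistic regression trained by batch gradient descent on mean log-loss plus `lam` times the squared gap between the two groups' mean predicted scores (a demographic-parity-style penalty). This is one simple choice among many, assuming two groups labeled 0 and 1; the function names, learning rate, and epoch count are hypothetical, and a production system would more likely use an established library.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def mean_score_gap(w, b, X, groups):
    """Mean predicted score of group 1 minus mean predicted score of group 0."""
    scores = [sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b) for x in X]
    s1 = [s for s, g in zip(scores, groups) if g == 1]
    s0 = [s for s, g in zip(scores, groups) if g == 0]
    return sum(s1) / len(s1) - sum(s0) / len(s0)


def train_fair_logreg(X, y, groups, lam=0.0, lr=0.1, epochs=2000):
    """Gradient descent on: mean log-loss + lam * gap ** 2 (groups labeled 0/1)."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    g1 = [i for i in range(n) if groups[i] == 1]
    g0 = [i for i in range(n) if groups[i] == 0]
    for _ in range(epochs):
        p = [sigmoid(sum(wj * xj for wj, xj in zip(w, X[i])) + b) for i in range(n)]
        # Gradient of the mean log-loss
        grad_w = [sum((p[i] - y[i]) * X[i][j] for i in range(n)) / n for j in range(d)]
        grad_b = sum(p[i] - y[i] for i in range(n)) / n
        # Penalty gradient: d(gap^2) = 2 * gap * d(gap), with d(sigmoid)/dz = p(1 - p)
        gap = sum(p[i] for i in g1) / len(g1) - sum(p[i] for i in g0) / len(g0)
        slope = [p[i] * (1 - p[i]) for i in range(n)]
        for j in range(d):
            dgap = (sum(slope[i] * X[i][j] for i in g1) / len(g1)
                    - sum(slope[i] * X[i][j] for i in g0) / len(g0))
            grad_w[j] += 2 * lam * gap * dgap
        dgap_b = sum(slope[i] for i in g1) / len(g1) - sum(slope[i] for i in g0) / len(g0)
        grad_b += 2 * lam * gap * dgap_b
        w = [wj - lr * gw for wj, gw in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


# Toy data: one feature that is correlated with group membership
X = [[1.0], [2.0], [3.0], [2.5], [-1.0], [0.0], [1.0], [-0.5]]
y = [1, 0, 1, 1, 0, 0, 1, 0]
groups = [1, 1, 1, 1, 0, 0, 0, 0]
for lam in (0.0, 5.0):
    w, b = train_fair_logreg(X, y, groups, lam=lam)
    print(lam, round(mean_score_gap(w, b, X, groups), 3))
```

Raising `lam` shrinks the gap between the groups' average scores, usually at some cost in log-loss; that trade-off is what the hyperparameter tuning step explores.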

#6) Hyperparameter Tuning

Experiment with hyperparameter settings to find a balance between accuracy and fairness. You may need to trade off some accuracy to achieve a fairer outcome. Grid search or Bayesian optimization can help identify optimal hyperparameters.
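A grid search can encode the accuracy-fairness trade-off as a constraint: keep the most accurate setting whose fairness gap stays within a chosen limit. The sketch below assumes a user-supplied `evaluate(params)` that trains a model and returns `(accuracy, fairness_gap)` on validation data; `toy_evaluate` and the 0.12 limit are hypothetical stand-ins, not real results.

```python
from itertools import product


def fair_grid_search(param_grid, evaluate, max_gap=0.1):
    """Try every combination in param_grid. evaluate(params) must return
    (accuracy, fairness_gap). Return (params, accuracy, gap) for the most
    accurate setting with abs(gap) <= max_gap, or None if none qualifies."""
    names = list(param_grid)
    best = None
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        accuracy, gap = evaluate(params)
        if abs(gap) <= max_gap and (best is None or accuracy > best[1]):
            best = (params, accuracy, gap)
    return best


# Hypothetical stand-in for "train with penalty lam, then score on validation
# data"; a larger penalty lowers both accuracy and the fairness gap here.
def toy_evaluate(params):
    lam = params["lam"]
    return 0.9 - 0.05 * lam, 0.3 / (1 + lam)


print(fair_grid_search({"lam": [0, 1, 2, 5]}, toy_evaluate, max_gap=0.12))
```

Returning `None` when nothing meets the limit makes it explicit that no tested setting is acceptable, rather than silently falling back to the most accurate model.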

#7) Cross-Validation and Evaluation

Utilize cross-validation techniques to assess model performance across different subsets of your data. Evaluate the model using your predefined fairness metrics to ensure it meets the desired fairness criteria.
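Reporting results per group within each fold shows disparities that an overall average can hide. The sketch below runs k-fold cross-validation with a user-supplied `fit_predict(X_train, y_train, X_test)` and records accuracy for each group on the held-out rows; `majority_baseline` and the toy data are hypothetical.

```python
import random


def k_fold_indices(n, k, seed=0):
    """Shuffle row indices and split them into k roughly equal folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[f::k] for f in range(k)]


def cross_validate_by_group(X, y, groups, fit_predict, k=5, seed=0):
    """For each fold, train on the other folds and report accuracy per group
    on the held-out rows."""
    results = []
    for test_idx in k_fold_indices(len(X), k, seed):
        held_out = set(test_idx)
        train_idx = [i for i in range(len(X)) if i not in held_out]
        preds = fit_predict([X[i] for i in train_idx], [y[i] for i in train_idx],
                            [X[i] for i in test_idx])
        per_group = {}
        for g in {groups[i] for i in test_idx}:
            hits = [preds[j] == y[i] for j, i in enumerate(test_idx) if groups[i] == g]
            per_group[g] = sum(hits) / len(hits)
        results.append(per_group)
    return results


# Hypothetical baseline: always predict the training set's majority label
def majority_baseline(X_train, y_train, X_test):
    label = 1 if 2 * sum(y_train) >= len(y_train) else 0
    return [label] * len(X_test)


X = [[i] for i in range(20)]
y = [1] * 15 + [0] * 5
groups = ["A"] * 15 + ["B"] * 5
for fold in cross_validate_by_group(X, y, groups, majority_baseline, k=5):
    print(fold)
```

Here the baseline is 75% accurate overall, yet every fold that contains group B rows shows 0% accuracy for that group. With small groups, some folds may contain no members of a group at all, so stratifying folds by group is worth considering.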

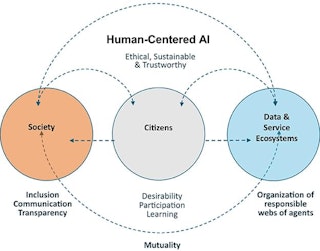

Source: Frontiers in Artificial Intelligence, Human-centricity in AI governance

Human Oversight

Human oversight is crucial for keeping AI fair and ethical. It means people are actively involved at each stage of AI development to catch and prevent bias and discrimination. To put human oversight into practice effectively, make sure the following procedures are in place.

#1) Hire a Diverse AI Development Team

Building diverse AI development teams is a crucial step in ensuring that your AI solutions are developed with a wide range of perspectives and experiences. Diversity in the team includes representation from various racial and ethnic backgrounds, gender identities, and other dimensions of diversity.

Recruitment and Inclusivity: Actively recruit team members with varied perspectives and backgrounds, and create an inclusive work culture where all voices are heard and valued.

Sensitivity Training: Provide training and resources to team members on recognizing and mitigating bias in AI systems, including specific considerations related to race and ethnicity.

Collaboration: Encourage collaboration with external experts and organizations with expertise in diversity and inclusion, who can provide guidance on potential sources of bias and discrimination.

#2) Ethical AI Review Boards

Establishing ethical AI review boards involves assembling groups of experts with diverse backgrounds, including ethicists, sociologists, and domain specialists. These boards are responsible for evaluating AI systems for ethical considerations, including those related to racism and discrimination.

Board Formation: Create a board with members from diverse racial and ethnic backgrounds, gender identities, and expertise in AI ethics and fairness.

Regular Reviews: Schedule periodic reviews of AI models, algorithms, and policies, specifically looking for biases and discriminatory outcomes.

Feedback Mechanisms: Establish channels for the board to receive feedback from users and stakeholders regarding concerns related to racism or discrimination in AI systems.

#3) Continuous Monitoring and Evaluation

Ongoing assessment means continuously monitoring the performance of AI systems in real time, looking for signs of bias or discriminatory behavior based on predefined fairness metrics.

Real-time Monitoring: Implement automated systems that continuously monitor AI predictions, interactions, and outcomes for fairness and bias.

Fairness Metrics: Define and regularly evaluate fairness metrics relevant to your specific use case. Implement thresholds for these metrics to trigger alerts when potential biases are detected.

Regular Reports: Generate regular reports on the performance of AI systems, highlighting any disparities or concerns related to race and ethnicity. Share these reports with the AI development team and ethical review boards.
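The real-time monitoring and alert thresholds described above can be sketched as a sliding window of recent decisions per group. This minimal version assumes each decision is logged with a group label and a favorable/unfavorable outcome; the class name, window size, minimum sample count, and 0.1 gap threshold are all hypothetical choices to be set per use case.

```python
from collections import deque


class FairnessMonitor:
    """Keep the most recent `window` decisions per group and alert when the gap
    between the highest and lowest favorable-outcome rates exceeds `max_gap`."""

    def __init__(self, window=500, max_gap=0.1, min_samples=30):
        self.window = window
        self.max_gap = max_gap
        self.min_samples = min_samples  # ignore groups with too few recent decisions
        self.history = {}

    def record(self, group, favorable):
        buffer = self.history.setdefault(group, deque(maxlen=self.window))
        buffer.append(1 if favorable else 0)

    def check(self):
        rates = {
            g: sum(buf) / len(buf)
            for g, buf in self.history.items()
            if len(buf) >= self.min_samples
        }
        if len(rates) < 2:
            return None  # not enough data to compare groups yet
        gap = max(rates.values()) - min(rates.values())
        return {"rates": rates, "gap": gap, "alert": gap > self.max_gap}


# Simulated stream with hypothetical groups: A is favored in 50% of decisions, B in 20%
monitor = FairnessMonitor(window=100, max_gap=0.1, min_samples=10)
for i in range(50):
    monitor.record("A", favorable=(i % 2 == 0))
    monitor.record("B", favorable=(i % 5 == 0))
status = monitor.check()
if status and status["alert"]:
    print("fairness alert:", status)
```

The minimum sample count keeps small, noisy windows from triggering false alarms; the resulting status dictionaries could also feed the regular reports shared with the review board.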

#4) Feedback Loops for Improvement

Feedback loops are essential for iteratively improving AI systems. They provide mechanisms for users and stakeholders to report concerns about biased or unfair AI behavior, facilitating ongoing refinement.

User Reporting: Create user-friendly channels, such as reporting buttons or forms, for users to report biased or discriminatory content or interactions with AI systems.

Dedicated Response Team: Assign a dedicated team responsible for reviewing user reports promptly and taking corrective actions when necessary.

Transparency and Communication: Communicate with users about the outcomes of their reports and any actions taken to address concerns. This transparency builds trust and encourages continued feedback.

#5) Informing Users about AI-Driven Content Recommendations

Educating users about AI-driven content recommendations involves transparently informing them that algorithms are responsible for tailoring content suggestions. It helps users understand the personalized nature of their online experiences and fosters trust in AI systems.

Onboarding Notifications: Implement onboarding notifications or pop-ups that explain how AI-driven content recommendations work on the website. Describe how user data is used to personalize content.

Privacy Policy and FAQs: Include a user-friendly privacy policy that outlines data usage for AI recommendations. Provide answers to frequently asked questions (FAQs) regarding AI and user data to address common concerns.

Personalization Controls: Give users the option to customize their AI-driven content recommendations. Allow them to adjust preferences or opt out of AI personalization if they desire.

#6) Promote Critical Thinking and Awareness

Encouraging users to think critically about the content they consume and raising awareness about potential biases and stereotypes is essential. This education empowers users to recognize and challenge biased content.

Develop and provide educational resources, such as articles, videos, or infographics, that teach users how to critically evaluate online content. Include guidance on identifying potential bias or misinformation.

Showcase content created by diverse voices and perspectives. Highlight user-generated content that contributes to a more inclusive online community.

Foster a sense of community and dialogue among users. Encourage discussions around diversity, equity, and inclusion topics, both on and off the website.

Enable users to report content that they believe is biased, offensive, or harmful. Provide clear guidelines on what constitutes a reportable issue and how to submit reports.

Legal Compliance

Ensuring that the AI ecosystem adheres to pertinent anti-discrimination laws and regulations is a fundamental measure in combating racial bias and discrimination. Here are the governance steps you should consider:

#1) Compliance Verification

Start by conducting a comprehensive review of anti-discrimination laws and regulations applicable to your AI system's context. These include, but are not limited to, U.S. legislation such as the Civil Rights Act and the Fair Housing Act, as well as comparable regional or national laws elsewhere. Your AI ecosystem must align with the legal frameworks established to protect individuals from racial and ethnic discrimination.

#2) Legal Audits

Regularly audit your AI system from a legal perspective. Collaborate with specialized legal experts well-versed in technology, data policy, and anti-discrimination law to identify potential discriminatory features, practices, or outcomes. These audits should encompass the entire lifecycle of the AI system, from data collection and model training to deployment and ongoing use.
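For U.S. employment decisions, one screening check legal reviewers often start with is the four-fifths rule from the EEOC Uniform Guidelines on Employee Selection Procedures (29 C.F.R. 1607.4(D)): a group's selection rate below four-fifths of the highest group's rate is generally regarded as evidence of adverse impact. The sketch below applies that ratio to hypothetical counts. It is a rule of thumb, not a definitive legal test, and other laws and jurisdictions use different standards.

```python
def four_fifths_check(selected, applicants):
    """Compare each group's selection rate with the highest group's rate and
    flag impact ratios below 0.8 (the four-fifths rule of thumb)."""
    rates = {g: selected.get(g, 0) / n for g, n in applicants.items() if n > 0}
    top = max(rates.values())
    report = {}
    for g, rate in rates.items():
        ratio = rate / top if top > 0 else 1.0
        report[g] = {
            "selection_rate": rate,
            "impact_ratio": round(ratio, 3),
            "below_four_fifths": ratio < 0.8,
        }
    return report


# Hypothetical applicant and selection counts, for illustration only
applicants = {"Group A": 100, "Group B": 80}
selected = {"Group A": 50, "Group B": 24}
for group, row in four_fifths_check(selected, applicants).items():
    print(group, row)
```

With these counts, Group B's selection rate (0.3) is 60% of Group A's (0.5), so it falls below the four-fifths threshold. Small samples can make this ratio unstable, which is one reason such flags call for legal review rather than an automatic conclusion.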

#3) Transparency in Compliance

Maintain transparent documentation of the legal compliance efforts undertaken for your AI system. Record the steps taken to identify and rectify discriminatory features, as well as any legal guidance received.

#4) Stakeholder Communication

Foster clear and open communication with stakeholders, including legal authorities, regarding your AI system's compliance with anti-discrimination laws. Promptly address any inquiries or concerns raised by regulatory bodies or affected individuals, emphasizing your commitment to upholding legal standards.

#5) Continuous Monitoring

Recognize that legal compliance is an ongoing process. Regularly review and update your AI system to ensure it remains in accordance with evolving anti-discrimination laws and regulations. Stay informed about changes in legal requirements and adapt your practices accordingly.

Additional Resources

AI Ethics Guidelines and Frameworks

IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems: An initiative focused on developing ethical guidelines and standards for autonomous and intelligent systems, including AI, to ensure they align with ethical principles.

Asilomar AI Principles by the Future of Life Institute: A set of principles and guidelines outlining ethical considerations for the development and deployment of artificial intelligence systems.

RESPECT AI: RESPECT AI is an initiative that focuses on responsible, ethical AI development. It offers resources, case studies, and guidelines for addressing bias and discrimination in AI.

Guidelines for Data Collection and Use by Algorithmic Impact Assessments: A resource providing guidelines and best practices for responsible data collection and use, particularly in the context of algorithmic impact assessments.

OpenAI's Data Usage Policy: OpenAI's policy outlining how data is collected, used, and shared in AI development to ensure ethical and responsible practices.

Resource Tools

AI Fairness 360 Toolkit by IBM: A toolkit offering various algorithms and metrics for detecting and mitigating bias and ensuring fairness in AI systems.

Aequitas Toolkit: A toolkit designed to assess bias and fairness in machine learning models and help organizations make equitable decisions.

Fairlearn by Microsoft: Fairlearn is an open-source Python library developed by Microsoft that focuses on fairness and bias mitigation in machine learning. It offers practical tools and guides for model fairness assessments and interventions.

AI Explainability 360 by IBM: A companion toolkit to AI Fairness 360, AI Explainability 360 provides algorithms for explaining model behavior and predictions, which can help teams understand and address bias in AI models.

OpenAI's GPT-3 Playground with Moderation API: OpenAI's GPT-3 Playground showcases the use of AI language models while highlighting the importance of moderation for avoiding biased or harmful outputs. It provides an example of responsible AI deployment.